Generative AI: The Basics

What is GenAI?

GenAI is a type of artificial intelligence that generates content in response to written prompts. It can generate many kinds of content, including text, images, videos, music, and programming code.

GenAI models are trained on very large datasets. Text generation models are trained on books, articles, websites, and social media content, some of which may include copyrighted material. Image, audio, and video generators are trained on extensive sets of images, audio clips, and video clips. Many tools also let you upload your own content, which may or may not be added to the training data.

These models learn to recognize patterns in the datasets and build predictive models for generating responses to written queries (or “prompts”). They generate responses through statistical analysis of the input data (e.g. the distribution of words in a sentence or pixels in an image) and by identifying and repeating common patterns.
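To make the idea of "identifying and repeating common patterns" concrete, here is a minimal sketch of a toy next-word predictor. It is an illustration only and not how any real GenAI model is built: actual models are vastly larger and use neural networks rather than simple counts. The function names and the sample training text are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follower_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follower_counts[current_word][next_word] += 1
    return follower_counts


def predict_next_word(model, word):
    """Return the word that most often followed `word` in training, or None."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical, tiny "training dataset" used only for illustration.
training_text = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train_bigram_model(training_text)

print(predict_next_word(model, "the"))  # most frequent follower of "the"
print(predict_next_word(model, "dog"))  # a word never seen in training
```

In this toy dataset, "cat" follows "the" more often than any other word, so the first call prints `cat`. The second call prints `None` because "dog" never appears in the training text, which hints at why output depends so heavily on what is in the training data.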

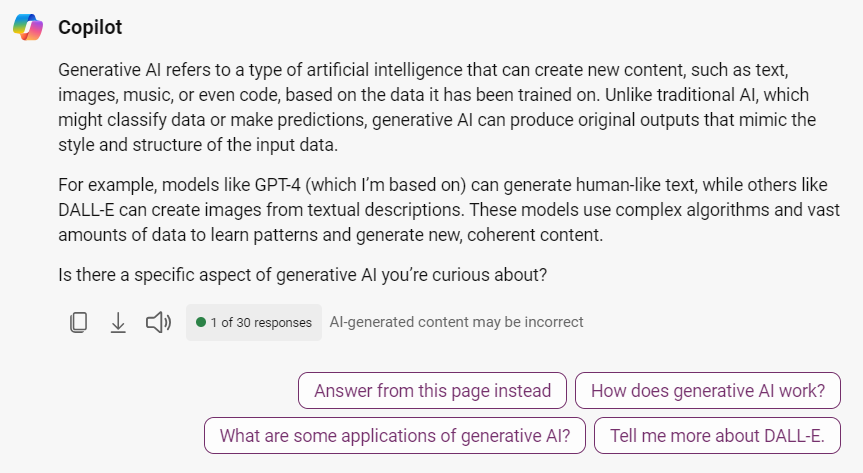

EXAMPLE: The prompt given to Copilot was “What is Generative AI”.

What is the Difference Between a GenAI Model and a GenAI Tool?

A GenAI model is the technology (e.g. algorithms) that enables content generation.

A GenAI tool is a service or user interface that allows you to interact with the GenAI model.

ChatGPT is a popular example of a GenAI tool. It is based on a type of GenAI model called a Large Language Model (LLM): algorithms that learn context and meaning by tracking relationships in sequential data, such as words in a sentence.

There are different “GPT” models with sub-versions.

GPT-4o (“o” for “omni”) is the latest model behind ChatGPT. It comes in versions that differ in cost, recency of training data, speed, and ability to handle simple versus complex tasks. There are various pricing options, depending on the types of tasks you do. Asking it to do a Google-like search, for example, requires less processing than doing complicated analysis of large datasets. This affects which model version you purchase, including the number of tokens available to you in a session.

GPT-4o mini is an affordable version that can do small, lightweight tasks quickly. Its training data extends to October 2023 (as of August 2024).

Other LLMs include Gemini (from Google), Claude (from Anthropic), and Llama (from Meta).

At Algonquin, faculty and staff have access to Microsoft Copilot for chat-based queries. This tool is built on the same OpenAI technology as ChatGPT.

Note: Log into Copilot with your Algonquin credentials so that organizational data protection applies, and make sure that you see the green shield icon. With this protection, the prompts that you type and the information that you upload are not used to train the model, which keeps that information private.

How “Smart” Are GenAI Tools?

Text GenAI tools, such as ChatGPT, have an interface that supports human-like conversations. However, the large language models (LLMs) behind tools like ChatGPT “present incorrect guesses with the same confidence as they present facts.” Furthermore, “…they are designed to be plausible (and therefore convincing) with no regard for the truth” (Véliz, 2023).

The accuracy of generated results continues to improve substantially with each model update. However, these tools are still prone to making up certain kinds of information, including references.

Create Prompts

When you ask a GenAI tool to do something, the text instructions that you provide are called a prompt. Often, you will engage in a conversation with the tool, adding clarifications as you go, in order to get the results that you want.

The process of creating prompts has been called prompt engineering, but don’t let this term intimidate you. It simply reflects that you can instruct the GenAI tool to do certain things. While there are developers using GenAI models to create complicated things, a regular, non-technical person can easily produce useful results with a basic structure and some practice.

The general practice involves explaining:

- The Problem: Describe what your need is.

- The Output Structure: For example, “Answer in a bulleted list,” “Respond in fewer than 100 words,” “Provide three examples,” or “Answer in the form of a limerick.”

- The Communication Tone, Style, or Personality: Describe how you want the tool to communicate with you. For example, “Answer the question as a patient math teacher.” Also provide any contextual details, unique knowledge, or special tools that it might need to meet your request.

Elements of a good prompt may include:

- Role: Tell the AI who it is. Context helps the AI produce more tailored results. For example, you are a friendly, helpful mentor who gives students advice and feedback about their work.

- Goal: Tell the AI what you want it to do. For example: give students feedback on their [project outline, assignment] that takes the assignment’s goal into account and pinpoints specific ways they might improve the work.

- Step-by-step instructions. Providing brief instructions can be effective. You can try this to get the specificity that you want.

- Add your own constraints. You can specify the kinds of things that you do not want to see in the results.
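The elements above can be combined into a single prompt. The sketch below is one possible way to assemble them, not a required format. The `build_prompt` function, its parameter names, and the sample steps and constraint are hypothetical and exist only for this illustration. The role and goal text are adapted from the examples in the list.

```python
def build_prompt(role, goal, steps=(), constraints=()):
    """Assemble a prompt string from a role, a goal, optional steps, and optional constraints."""
    lines = [f"Role: {role}", f"Goal: {goal}"]
    if steps:
        lines.append("Steps:")
        lines.extend(f"{number}. {step}" for number, step in enumerate(steps, start=1))
    if constraints:
        lines.append("Constraints:")
        lines.extend(f"- {constraint}" for constraint in constraints)
    return "\n".join(lines)


prompt = build_prompt(
    role="You are a friendly, helpful mentor who gives students advice and feedback about their work.",
    goal="Give students feedback on their project outline that takes the assignment's goal into account.",
    # The steps and constraint below are made-up examples.
    steps=[
        "Ask the student to share the outline.",
        "Point out two specific ways to improve it.",
    ],
    constraints=["Do not rewrite the outline for the student."],
)
print(prompt)
```

The printed text can be pasted into a chat tool such as Copilot as a starting point, and then refined through follow-up messages.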

View these resources for more information:

- How to approach prompting of AI chatbots (University of Kansas, Center for Teaching Excellence)

- A Teacher’s Prompt Guide to ChatGPT: Prompt ideas for various teaching and learning activities.

What is GenAI Good at Doing?

Tools like ChatGPT can significantly speed up work processes and improve the quality of work. They are great for:

- Brainstorming ideas (e.g. for assignments, discussion questions, etc.)

- Answering questions

- Explaining things in easy-to-understand ways

- Summarizing and outlining information

- Composing first drafts (e.g. emails, papers, social media posts, etc.)

- Preparing test questions and evaluation rubrics

- Proofreading written work

- Translating text into different languages (though they are not completely fluent in every language)

- Helping to write or debug computing code

See Opportunities with Generative AI.

What is It Not Great At?

As predictive models that prepare results based on algorithms, GenAI tools lack common sense, emotional intelligence, and an understanding of the nuances of natural human language. They can take on human-like conversational styles when presenting information, but the results may not always be correct or even make sense. They also do not “understand” math well enough to solve complex problems correctly.

Therefore, ChatGPT is not good at:

- Solving complex math problems

- Responding to unexpected questions within a conversation prompt

- Always providing correct information. It can make up information – including resource citations that do not exist.

- Providing context-specific information (though this may be mitigated by including context cues within your prompts)

- Producing unbiased output. Output may reflect biases in the training dataset and in how the model was fine-tuned.

Concerns Related to Training GenAI Models

Impact on the Environment

It is estimated that training GPT-3 used 1,287 megawatt-hours of electricity and generated 552 tons of carbon dioxide, the equivalent of driving 123 cars for one year (Generative AI Hub, UCL). The immense processing power required to train these models creates a large carbon footprint.
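As a rough check on how these figures relate, the short sketch below derives two per-unit rates from the numbers quoted above. The variable names are illustrative, and the results are simple divisions of the cited estimates, not additional data.

```python
# Figures quoted in the paragraph above (Generative AI Hub, UCL).
training_energy_mwh = 1287
training_emissions_tons = 552
equivalent_cars = 123

# Emissions implied per megawatt-hour of electricity used.
tons_per_mwh = training_emissions_tons / training_energy_mwh

# Emissions implied per car driven for one year.
tons_per_car_year = training_emissions_tons / equivalent_cars

print(f"{tons_per_mwh:.2f} tons of CO2 per MWh")
print(f"{tons_per_car_year:.2f} tons of CO2 per car per year")
```

In other words, the estimate implies roughly 0.43 tons of carbon dioxide per megawatt-hour and about 4.49 tons per car per year.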

Human Costs

Part of the training process for GenAI models, before they are released for use, is a refinement process called Reinforcement Learning from Human Feedback (RLHF). This involves having individuals review GenAI responses for accuracy and appropriateness, including alignment with “guardrail” criteria that prevent the generation of objectionable material. During the development of ChatGPT, RLHF reviewers were outsourced labourers from countries such as Kenya and were paid less than $3 per hour to review data that included disturbing content.

There are other important considerations when using GenAI, including bias and negative stereotypes in datasets, equitable access to the technology, data security and privacy, and accuracy of output. These issues are being actively worked on, and improvements are being made. See Generative AI: Limitations.

A Quickly Changing Landscape

The dynamic, self-learning nature of these tools, coupled with a competitive, evolving AI marketplace, has created a context of constant, rapid change. The ChatGPT, Copilot, or Adobe Firefly tool that you use today is not the same as it was a few months ago. Training and tuning of AI models may improve performance in certain ways while modifying behaviour in others.

The good news is that using GenAI tools for regular kinds of queries and tasks is not difficult and will likely become easier for the average person, especially as the tools are incorporated into other software we are familiar with. One example is Microsoft’s integration of Copilot into Office 365 programs such as Outlook, Word, PowerPoint, and Excel.

References

Generative AI Hub, UCL. (n.d.). Retrieved January 5, 2023, from https://www.ucl.ac.uk/teaching-learning/generative-ai-hub/introduction-generative-ai

Véliz, Carissa. (2023, August 1). What Socrates Can Teach Us About AI. Time. https://time.com/6299631/what-socrates-can-teach-us-about-ai/